By Marka Ellertson

Cathy O’Neil believes that our blind faith in data must end.

In today’s world, there are two diverging trends in the relationship between scientific or mathematical thinkers and the general public. On the one hand, science is being devalued as a wave of anti-intellectualism sweeps the world. Climate change is denied by the US government, and massive cuts are made to the budgets of researchers and STEM thinkers. This is why STEM communication is so important: if math and science are only described in journals read by other specialists, that knowledge will never help the rest of society inform its actions, policies, or decisions. On the other hand, there is an opposite trend: an unwarranted trust in the data and numbers around us. This trust draws on a piece of human psychology that advertisers have long exploited. Saying “some dentists recommend this toothpaste” is nowhere near as persuasive as the familiar “9 out of 10 dentists recommend this toothpaste.” And as more and more large systems, organizations, and companies rely on computers and big data to keep society running, the numbers they produce often go unquestioned.

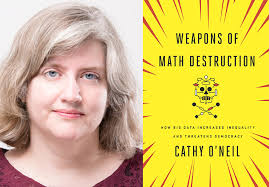

This is where Cathy O’Neil comes in. A mathematician herself, she wrote Weapons of Math Destruction, a book that aims to help every reader understand how the algorithms and data we rely on today can be biased or incorrect, and can deepen social harms.

O’Neil began her college education as an undergraduate at UC Berkeley. She went on to receive a PhD in mathematics from Harvard in 1999, and then worked in academia at both MIT and Barnard College. From there, her career moved away from mathematical research toward more practical applications of her skills, namely in finance. Living in New York City, she spent four years working on Wall Street, including two years at the hedge fund DE Shaw. However, O’Neil became disillusioned with the corporate world and began to see that the data processed by companies like DE Shaw was not actually producing a better society. In 2011, she left Wall Street at the start of the Occupy movement and became involved with the Alternative Banking Group, which sprang out of it. Throughout this period, O’Neil had also been running her blog, mathbabe.org, which at the time served as a personal sounding board for her daily life and activities. She married another mathematician, and they had three sons in New York City.

O’Neil’s next big project after the Occupy protests combined her interest in and knowledge of math and algorithms with her deepening understanding of social justice and of how to spark change. After becoming an independent data consultant, she spent two years writing Weapons of Math Destruction, which was released in 2016 and longlisted for the National Book Award for Nonfiction.

The thesis of O’Neil’s book goes back to her belief that our faith in big data must end. As she points out, algorithms are everywhere, and they separate, in her words, “the winners and the losers.” The people algorithms favor get credit cards, job offers, or lower insurance rates. Others never even get a callback for an interview, or are held in jail on a much higher bail. O’Neil describes an algorithm as built from two ingredients: data from the past and a goal for the future. The system uses math to evaluate or predict outcomes from that data and toward that goal, producing a result that may seem objective and fair but may in fact be causing harm.

The goal is one place where bias can enter. To explain this, O’Neil gives the example of a dinner-choosing algorithm. In this system, the data includes the chef’s memories of good meals in the past, the food in the pantry, and the recipes that can be made with that food. So far, all is well. But the goal of the system can significantly change the outcome. For a parent, a dinner-choosing algorithm succeeds when it chooses a healthy, balanced meal with lots of vegetables. For a child, it succeeds when it chooses Nutella and ice cream. The example makes the potential for bias clear: parents hold the power in this situation, so they get to define success. In this way, algorithms are not as objective as we are taught to think. Instead, they rest heavily on the opinions and desires of the people who build them.
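The dinner example can be made concrete with a toy sketch. This is not O’Neil’s code: the recipe names and scores below are hypothetical, chosen only to show that the same pantry data produces a different “best” dinner depending on which goal function does the scoring.

```python
# Toy version of the dinner-choosing example: the data (recipes) is fixed,
# and only the goal function that defines "success" changes.
# All recipes and scores here are hypothetical.

RECIPES = {
    "vegetable stir-fry": {"vegetables": 3, "sugar": 0},
    "pasta with salad": {"vegetables": 2, "sugar": 1},
    "Nutella and ice cream": {"vegetables": 0, "sugar": 5},
}


def parent_goal(recipe):
    """Success for a parent: more vegetables, less sugar."""
    return recipe["vegetables"] - recipe["sugar"]


def kid_goal(recipe):
    """Success for a child: as much sugar as possible."""
    return recipe["sugar"]


def choose_dinner(recipes, goal):
    """Pick the recipe that scores highest under the given goal."""
    return max(recipes, key=lambda name: goal(recipes[name]))


print(choose_dinner(RECIPES, parent_goal))  # vegetable stir-fry
print(choose_dinner(RECIPES, kid_goal))     # Nutella and ice cream
```

Nothing about the data changes between the two calls; whoever writes the goal function decides what counts as the right answer.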

Another issue O’Neil raises is the bias that faulty or incomplete data can introduce into an algorithm. In her dinner example, the data was the contents of the pantry, but the chef could choose to leave out certain items, such as the cookie supply, if they decided those were irrelevant to the outcome. For a real-world setting, O’Neil turns to Fox News, where founder Roger Ailes was ousted after two decades when more than twenty women came forward accusing him of harassment and of limiting their careers at the company. She asks us to imagine that Fox tried to fix the problem by taking hiring out of the hands of sexist men and instead using an algorithm that would “objectively” evaluate candidates for a given position. The algorithm’s goal, finding the most qualified candidate, makes sense. Its data would be the last 21 years of applications to Fox News, matched against a profile of previously successful employees, with a successful employee defined as someone who stayed at the company for at least four years and was promoted at least once. This too seems reasonable. However, because of the company’s history of discrimination and harassment, women had a much higher turnover rate than men and were less likely to stay for long, and the culture of sexism also made them less likely to be promoted. Relying on this data, the algorithm would naturally rank men as the most qualified applicants, because they matched the profile of successful past workers at Fox News. And because algorithms appear objective and scientific to most observers, this new, math-based form of discrimination would be even harder to recognize and protest.
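A small sketch shows how the “reasonable” definition of success bakes past discrimination into the labels. The employee records below are invented for illustration (they are not Fox News data), and `score_applicant` is a hypothetical, deliberately crude stand-in for a real hiring model.

```python
from collections import defaultdict

# Hypothetical historical records: (group, years_at_company, promoted).
# Invented to mirror the pattern described above, in which harassment
# pushed women out sooner and blocked their promotions.
HISTORY = [
    ("man", 6, True), ("man", 5, True), ("man", 8, True), ("man", 2, False),
    ("woman", 2, False), ("woman", 3, True), ("woman", 5, False), ("woman", 1, False),
]


def is_successful(years, promoted):
    """The thought experiment's definition: at least four years and one promotion."""
    return years >= 4 and promoted


def success_rate_by_group(records):
    """Fraction of past employees in each group labeled 'successful'."""
    totals = defaultdict(int)
    wins = defaultdict(int)
    for group, years, promoted in records:
        totals[group] += 1
        wins[group] += is_successful(years, promoted)  # True counts as 1
    return {g: wins[g] / totals[g] for g in totals}


def score_applicant(group, rates):
    """Hypothetical crude model: score = past success rate of similar people."""
    return rates.get(group, 0.0)


rates = success_rate_by_group(HISTORY)
print(rates)  # {'man': 0.75, 'woman': 0.0}
```

A model trained to reproduce these labels learns that resembling the men in the history predicts “success.” Dropping the gender column would not necessarily fix this, since other résumé details can act as proxies for it.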

For an even clearer example of the harm bad data can cause, O’Neil offers a thought experiment about a fictional city. In this city, the entire West side is white and the entire East side is made up of people of color. The city starts with one police officer, who patrols the East side and arrests a few people, all of whom are people of color because that is who lives there. The city then wants to expand its police force, so it feeds its arrest data into an algorithm to find out where the most crime is. Seeing that every arrest so far has happened in the East, the computer recommends sending more and more officers to that neighborhood alone. Those officers make more arrests there, which strengthens the recommendation, while crime on the West side is never recorded at all. Real police departments do not concentrate their entire force in a single area, but communities of color are in fact policed far more heavily, in residential areas and in schools, than white areas. Furthermore, some jurisdictions now use algorithms to inform bail and sentencing decisions. Judges are often not told how or why the software calculates this “recidivism risk,” yet factors that are often racialized (such as geographic location, income level, and family) are included in the data behind these life-changing decisions.
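The feedback loop in this thought experiment can be simulated in a few lines. This is a hypothetical sketch, not a model of any real policing system: both sides of the city are given the same underlying crime rate (the `CRIME_RATE` value and the doubling `growth` factor are invented), and the “algorithm” simply sends officers wherever arrests have been recorded.

```python
# Hypothetical simulation of the feedback loop in O'Neil's fictional city.
# Both sides have the SAME underlying crime rate; the only difference is
# where the first officer happened to patrol.

CRIME_RATE = 0.5  # hypothetical arrests per officer per round, identical on both sides


def allocate(arrests, officers_total):
    """The 'algorithm': assign officers in proportion to recorded arrests."""
    total = sum(arrests.values())
    return {side: officers_total * count / total for side, count in arrests.items()}


def simulate(rounds, growth=2):
    officers = {"West": 0, "East": 1}     # one officer starts on the East side
    arrests = {"West": 0.0, "East": 0.0}  # cumulative recorded arrests
    for _ in range(rounds):
        # Arrests can only be recorded where officers are patrolling.
        for side in officers:
            arrests[side] += officers[side] * CRIME_RATE
        # The force grows and is redistributed according to the arrest data.
        officers = allocate(arrests, sum(officers.values()) * growth)
    return officers, arrests


officers, arrests = simulate(rounds=5)
print(officers)  # {'West': 0.0, 'East': 32.0}
print(arrests)   # {'West': 0.0, 'East': 15.5}
```

Because arrests are only observed where officers already are, the West side’s count stays at zero however much crime happens there, so the data never gives the model a reason to look.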

O’Neil has become famous in the STEM world for uncovering the bias behind the algorithms that run our country. She continues to expose how data scientists can use black-box algorithms to hide information from people and then call the results objective or meritocratic. She points out that many of these algorithms are written by private companies and sold to the government, so their makers can legally keep secret from the public the goals and data that inform supposedly scientific decisions.

Today, O’Neil has taken her work to a practical level. Instead of only writing about flawed algorithms, she has founded ORCAA, an algorithmic auditing company. Her audits have four components. First comes a data check, which interrogates where the data comes from and which data points are included, and considers what bias may be present. Second, she examines the definition of success. In the Fox News example, a “successful” employee was largely one whom the company’s sexist culture had allowed to succeed. A counterexample is orchestra auditions, which are conducted behind a curtain that hides the musician’s identity. Orchestras decided that the only relevant measure of success was the quality of the playing, and once this policy was implemented, the number of women in orchestras increased fivefold. Third, there is an evaluation of accuracy: how has the algorithm been performing, and what is the cost of a mistake? Finally, O’Neil says she tries to think about the long-term impact of algorithms. While this last point can be much more abstract, she points to the dangerous feedback loops and news bubbles created on Facebook when people are exposed only to their friends’ views. If Facebook’s algorithm had been interrogated in this way, perhaps this problem would not exist. Conducting these audits, for clients such as the Illinois Attorney General’s Office and Consumer Reports, has become O’Neil’s day-to-day work.

Besides running algorithmic audits at ORCAA, O’Neil remains active as a STEM communicator, writing about algorithmic harm and about issues in math research and education on her personal blog and as a regular contributor to Bloomberg View, and occasionally for other publications such as the New York Times. In her personal life, O’Neil lives with her family in New York City and has recently taken up running, completing her second 5K race in early 2018.

Personal Reflection:

Cathy O’Neil is a very inspiring STEMer to me. Especially as a student at Lick, I have developed a genuine interest in understanding the social inequity around me and in how to rectify it. O’Neil has done something incredible: she has let her personal experiences as a mathematician and in the Occupy movement shape a career addressing social ills. The math I learn in school often feels like a dead end, irrelevant to the world outside the math community, but learning about O’Neil’s work helps me see how deeply mathematical thinking and algorithms are ingrained in our society, and how they can either create systems of injustice or help dismantle them. Although my passions often lie in history and social studies, I share O’Neil’s hope to understand and help solve social problems, and I am inspired by the career she has built trying to fix them.