It is no secret that Artificial Intelligence (AI) imaging technology is on the rise. In the past year, photo-editing giants such as Adobe, Microsoft and Google have all announced new AI technologies that will be applied to mobile phone images, offering services to alter users’ photos completely. These developments are the natural progression in a time when AI is growing at incredible speed and becoming more integrated into user experience daily. This growth leaves many users with the question: What happens when our photos become more perfect than real?

Photo editing has existed for nearly as long as photography itself. From hand-altering in the 1800s to cropping and splicing in the 21st century, photo editing has always consisted of an alteration or enhancement of existing objects in the photo itself. However, what makes this most recent AI development different is that photo editing has now become generative. Today, editing tools such as Adobe’s “Generative Fill” can take photos and construct something entirely new, changing the structure, narrative and significance of a previously captured moment.

Many popular companies have begun advertising this new editing technology to broader audiences, displaying the true power and influence that AI has gained. A recent Google advertisement depicts a mother capturing her giggling son as his father lifts him and gently tosses him into the air. In the next frame, the mother clicks a button on her phone labeled “Magic Editor,” which allows her to isolate the boy on her screen and effortlessly drag him higher into the air and farther from his dad’s outstretched arms. With another tap, she adds contrast to the washed-out sky in the background, making the clouds pop. The boy is now soaring against an iridescent sky. A good shot has now become a great one.

The possibilities of AI photo editing can seem endless. The increased development of these technologies excites graphic design amateurs and professionals alike; something previously only available to experts has now become accessible to everyday people through their phones. Life’s moments can now achieve the same style and allure as those seen in magazines or on Instagram.

To many, however, these increasing developments in AI warrant some concern. In order to function, AI relies on complex algorithms that mimic human memory patterns to recognize and recall images. However, these algorithms are not flawless replicas of human memory processes and can be influenced by biases in training data. The human brain, which is susceptible to cognitive biases and visual cues, may be tricked into accepting altered images as authentic memories. “The cadence at which AI will bring change to our daily lives, including our information habits, is making everybody nervous and unbalanced,” Karen Silverman, member of the World Economic Forum’s AI Global Council, said. “That’s its own security and mental health risk.”

Generative AI changes not only the appearance of photos, but also the context, emotions and memories assigned to them. It is unlikely that one was actually bathed in perfect golden-hour light the first time they met their pet, yet an edited photo may lead them to recall the moment that way.

Over time, memories blend sensory information into something between what was real and what we feel. The process of synaptic pruning, a fundamental aspect of neural development, involves the elimination of excess synapses in the brain, refining neural circuits and optimizing efficiency. This means that by creatively editing their photos, one runs the risk of rewriting their memories entirely.

Beyond our psychologies alone, generative AI has the potential to affect our expectations for the future. If one remembers every cherished past experience as absolutely perfect, there is an expectation for future events to mimic that. Anything that does not appear as beautiful and idealized as our curated memories may seem lackluster and banal in comparison.

“I think there’s always been a desire among people to fit a type or be normal. With its ability to manipulate and manufacture images, AI could compromise our individuality and self-discovery, our abilities to become things that don’t already exist,” said Lizzy Brooks, who teaches Community Computing at Lick-Wilmerding High School. Generative photo editing creates the possibility of idealizing one’s memory to the extent that it negates all human errors and deficiencies. AI leaves little space for humans’ innate imperfections.

As it grows in presence and capacity, AI easily permeates one’s own experiences. Psychologists and technologists agree that AI is changing its users’ brains as a whole. Increasing developments have raised the question: When do technology experts say ‘enough is enough’? Just because something can be further innovated, does it need to be?

Governments, organizations and researchers are making concerted efforts to establish guidelines and frameworks that govern the development and deployment of AI systems. For instance, the European Union has introduced the General Data Protection Regulation (GDPR), which includes provisions related to the processing of personal data by AI algorithms. International organizations, such as the Organisation for Economic Co-operation and Development, are working on creating global standards for AI governance. These initiatives aim to address concerns related to privacy, bias, transparency and accountability in AI systems, reflecting a proactive approach to harnessing the benefits of AI while mitigating potential risks.

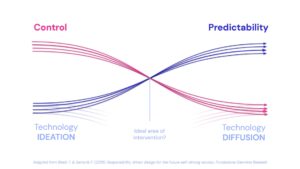

However, there is another more hopeful approach to AI. Haley Williams and Chai Peddeti are two nuclear engineering Ph.D. students at UC Berkeley whose work often concerns the regulation and ethics of nuclear engineering. They both referenced an idea called the Collingridge dilemma, introduced by David Collingridge, to highlight the difficulty of predicting and controlling the societal impacts of technology. Collingridge emphasized the challenges in regulating technology effectively, especially in its early stages.

The dilemma suggests that during the initial phases of technology development, when the outcomes are uncertain, regulating the technology is challenging. However, once the negative consequences become apparent, implementing significant changes to counteract the technology may be too late. Essentially, technologists do not know the dangers of what they create until it is often too late to go back.

“The question becomes, how do we anticipate how technology will change our society while still giving room for innovation?” said Williams. Peddeti agrees. “Just because we’re scared of an outcome doesn’t necessarily mean that we shouldn’t do it. The only reason we’re scared is because we don’t fully understand all the outcomes that are possible,” he said.

Both supporters and dissenters of AI agree that there comes a point at which to intervene in the development process of any technology; the question simply becomes when that point should be.

As much as AI has come to define the world, many people believe it should not define who they are as people. “Even though AI enhances our normative tendencies, we were already doing that way before these technologies became available. Even then, it was already a step of increased consciousness to rebel against the norm. I think that we still have the muscles to rebel and do our own thing even amidst AI,” Brooks said.

Brooks is not alone in this viewpoint. Technologists such as Andrew Ng, Elon Musk and Gary Marcus all believe that despite AI’s ever-growing influence, our agency as individuals remains a formidable force. They stress the innate human ability to defy norms and shape unique paths, which is seen as vital to affirming humans’ distinct essence. The future of AI is uncertain, but human agency will determine how large a role it plays in our lives.